ChatGPT Maker Suspects China’s Dirt-Cheap DeepSeek AI Models Were Built Using OpenAI Data, and the Irony Is Not Lost on the Internet

OpenAI suspects that China's DeepSeek, whose AI models are significantly cheaper than their Western counterparts, trained those models using OpenAI data, a claim that has added to an already heated controversy. DeepSeek's R1 reasoning model is built on its open-source DeepSeek-V3 model, which reportedly cost only about $6 million to train, a fraction of what Western labs spend on comparable models. News of these low costs triggered a sharp sell-off in AI-related stocks, with Nvidia suffering its largest single-day loss in market value on record.

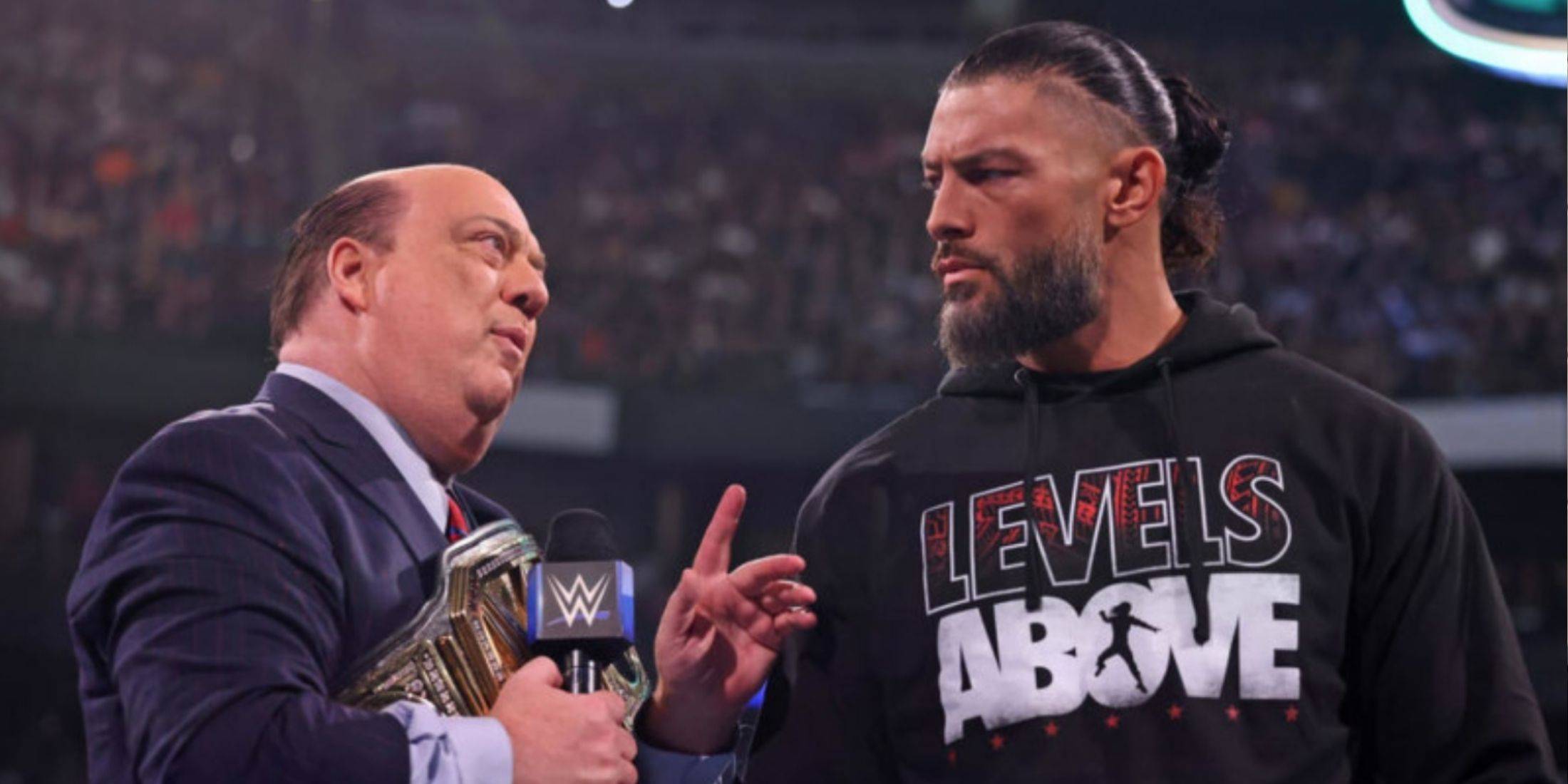

The episode prompted OpenAI and Microsoft to investigate whether DeepSeek violated OpenAI's terms of service by using its API for model distillation, a technique in which the outputs of a larger "teacher" model are used to train a smaller "student" model. OpenAI said it was aware that companies in China and elsewhere had attempted to distill its models, and stressed that it protects its intellectual property (IP) through countermeasures and by working with the U.S. government.
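To make the term concrete, here is a minimal Python sketch of the textbook form of knowledge distillation, in which a student model is trained to match a teacher's temperature-softened output probabilities. It is an illustration under stated assumptions, not a description of what DeepSeek did: an API typically returns generated text (at most with a few top-token probabilities) rather than full probability distributions, so API-based distillation usually means fine-tuning the student on the teacher's text responses. The function names and toy logits below are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    # Divide logits by the temperature; a higher T gives a "softer" distribution
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions,
    scaled by T^2 as in the standard soft-target formulation."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return (temperature ** 2) * kl

# Toy example: hypothetical logits over a 4-token vocabulary (made-up numbers)
teacher = [4.0, 1.5, 0.5, -1.0]
close_student = [3.8, 1.6, 0.4, -0.9]
far_student = [-1.0, 0.5, 1.5, 4.0]

print(distillation_loss(teacher, close_student))  # small: student mimics teacher
print(distillation_loss(teacher, far_student))    # much larger: student disagrees
```

Training would repeatedly nudge the student's parameters to lower this loss, pulling its predictions toward the teacher's.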

Donald Trump said DeepSeek's release should serve as a wake-up call for the U.S. tech industry. His AI czar, David Sacks, went further, saying there was strong evidence that DeepSeek had used OpenAI's models.

The situation highlights the irony of OpenAI's position, given its own past practices. OpenAI has previously argued, in a submission to the UK's House of Lords, that it would be impossible to create AI models like ChatGPT without using copyrighted material. That stance is further complicated by ongoing lawsuits from the New York Times and 17 authors accusing OpenAI and Microsoft of copyright infringement; OpenAI maintains that its training practices constitute "fair use." The legal battles over using copyrighted material to train AI are intensifying, and they are shaped in part by the U.S. Copyright Office's position that AI-generated art cannot be copyrighted, a position that may also limit any copyright claim OpenAI could make over its own models' outputs.
